Shifting Left: when and how to conduct performance tests?

By Ziv Kalderon, Engineering Team Lead at ActiveFence

In the world of software development and maintenance, performance testing is an essential component, especially when strict Service Level Agreements (SLAs) are in place. Failing to meet these SLAs can have significant consequences for clients, resulting in instability and loss of trust. At ActiveFence, our customers rely heavily on our system, which puts even greater emphasis on stable and consistent performance. In this blog post, we will explore the critical role of performance testing in maintaining a reliable and efficient software system.

What is performance testing?

Incorporating performance testing into the software development life cycle is key to ensuring that SLAs are met and that user expectations are exceeded. By simulating various scenarios, performance testing can identify potential bottlenecks, errors, or weaknesses in the system that can negatively impact the user experience. This way, performance testing helps ensure that the system can handle the expected workload and maintain the desired response time under varying conditions. It also provides data-driven insights that can be used to optimize the system for better performance in the future.

The different types of performance tests

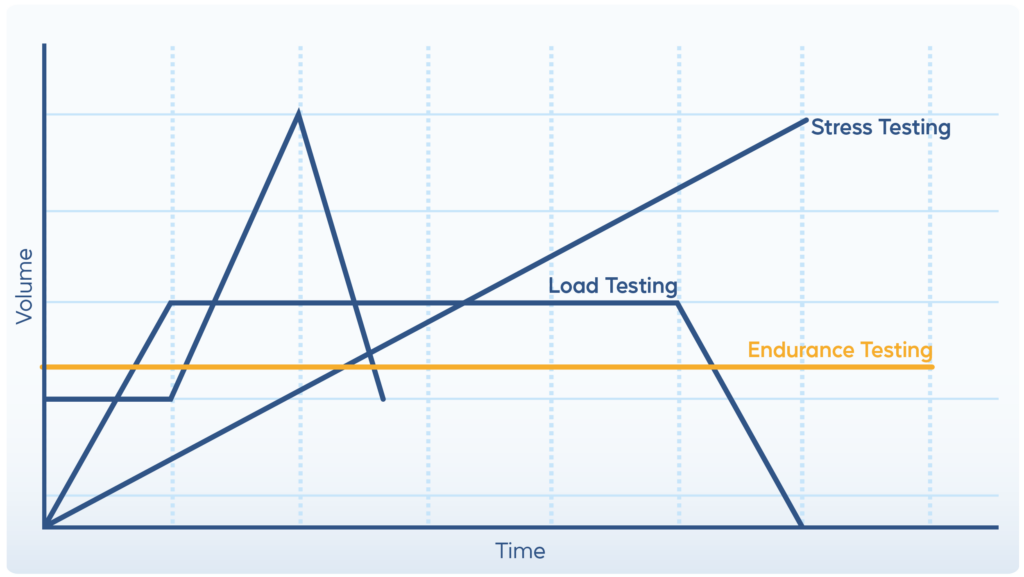

Performance testing covers a suite of tests that evaluate how a software application performs under different levels of load or stress. Each type has a distinct purpose and provides valuable insights into system performance under different conditions:

- Load: establishes the performance of the system under normal operating conditions.

- Stress: evaluates the system’s ability to handle high levels of concurrent users or transactions, beyond normal operating conditions.

- Spike: simulates sudden and drastic increases in traffic to the system.

- Endurance: evaluates the system’s performance over an extended period of time, typically several hours or days. It is useful for finding memory leaks.

- Scalability: measures the system’s ability to handle an increasing workload by adding more resources, such as servers or databases.
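To make the differences between these test types concrete, here is a minimal sketch that describes the load, stress, spike, and endurance tests as lists of stages, each a (duration in seconds, target number of virtual users) pair, similar in spirit to the `stages` option in k6. The stage values are made-up assumptions for illustration, not recommendations. Scalability tests are left out because they usually rerun one of these profiles while varying the resources rather than the load shape.

```python
from typing import Dict, List, Tuple

# (duration_seconds, target_virtual_users); all values are illustrative assumptions.
Stage = Tuple[int, int]

PROFILES: Dict[str, List[Stage]] = {
    # Ramp up to the expected load, hold it, then ramp down.
    "load": [(60, 100), (600, 100), (60, 0)],
    # Keep raising the load past the expected level to find the breaking point.
    "stress": [(120, 100), (120, 200), (120, 400), (120, 800), (60, 0)],
    # Jump abruptly from a baseline to a burst, hold it, and drop back.
    "spike": [(60, 50), (10, 1000), (120, 1000), (10, 50), (60, 0)],
    # Hold a moderate load for hours to expose leaks and slow degradation.
    "endurance": [(300, 100), (8 * 3600, 100), (300, 0)],
}


def target_users(stages: List[Stage], t: float, start_users: int = 0) -> float:
    """Return the target number of virtual users at time t (in seconds),
    interpolating linearly from the previous target within each stage."""
    elapsed = 0.0
    current = start_users
    for duration, target in stages:
        if t <= elapsed + duration:
            fraction = (t - elapsed) / duration
            return current + (target - current) * fraction
        elapsed += duration
        current = target
    return current  # past the last stage: stay at its final target
```

For example, `target_users(PROFILES["spike"], 65)` falls halfway through the 10-second jump and returns 525.0, while the load profile never exceeds 100 users.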

What can performance tests measure?

Performance tests require us to put a demand on the system and measure the response. Both steps can be automated with tools that fit into the development lifecycle. To get the most value from these tests, product managers and engineers need to work together to define exactly what is expected from the system.
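Putting a demand on the system and measuring the response is mechanically simple, even though production-grade tools add much more (protocol support, pacing, distributed workers, reporting). The following toy sketch uses only the Python standard library: threads act as virtual users that call a target function in a closed loop and record each call's latency and outcome. The `request` callable is a stand-in assumption; against a real service it would issue an HTTP call or similar.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Sample:
    start: float       # time.perf_counter() reading when the request began
    latency_s: float   # how long the request took, in seconds
    ok: bool           # False if the request raised an exception


def run_load(request: Callable[[], object], users: int,
             requests_per_user: int) -> List[Sample]:
    """Run `users` virtual users concurrently; each sends `requests_per_user`
    requests back to back (a closed loop: the next request starts only after
    the previous one returns)."""

    def virtual_user() -> List[Sample]:
        samples: List[Sample] = []
        for _ in range(requests_per_user):
            start = time.perf_counter()
            try:
                request()
                ok = True
            except Exception:  # any error counts as a failed request
                ok = False
            samples.append(Sample(start, time.perf_counter() - start, ok))
        return samples

    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(virtual_user) for _ in range(users)]
        # Flatten the per-user sample lists into one list for analysis.
        return [sample for f in futures for sample in f.result()]
```

For example, `run_load(lambda: time.sleep(0.01), users=10, requests_per_user=5)` returns 50 samples. Threads are adequate for a sketch like this because real requests spend most of their time waiting on I/O.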

Performance tests can measure the following:

- Response time: the time it takes for the system to respond to a user request.

- Throughput: the number of transactions or requests the system can handle per unit of time.

- Resource utilization: the amount of system resources, such as CPU, memory, and disk, used during the test, which directly affects cost.

- Error rates: the percentage of failed or erroneous transactions or requests.

- Concurrent user capacity: the maximum number of users that the system can handle simultaneously.

- Network latency: the time it takes for data to be transmitted between the system and its users or external services.
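Several of these metrics can be computed directly from per-request records. The sketch below assumes each record is a `(start_time_s, latency_ms, succeeded)` tuple, a layout chosen for illustration rather than any specific tool's export format, and derives response-time percentiles, throughput, and error rate. It uses the nearest-rank percentile definition; tools differ in how they interpolate percentiles, so small differences from a tool's own report are expected.

```python
import math
from typing import Dict, List, Tuple

# (start_time_s, latency_ms, succeeded): an assumed record layout for this sketch.
Record = Tuple[float, float, bool]


def percentile(values: List[float], p: float) -> float:
    """Nearest-rank percentile: the smallest value such that at least p% of
    all values are less than or equal to it."""
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(records: List[Record]) -> Dict[str, float]:
    """Compute headline metrics for one test run."""
    if not records:
        raise ValueError("no records to summarize")
    latencies = [latency for _, latency, _ in records]
    failures = sum(1 for _, _, ok in records if not ok)
    # Window: from the first request's start to the last request's completion.
    first_start = min(start for start, _, _ in records)
    last_end = max(start + latency / 1000 for start, latency, _ in records)
    window_s = last_end - first_start
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "throughput_rps": len(records) / window_s,
        "error_rate_pct": 100.0 * failures / len(records),
    }
```

For four requests starting at 0, 0.5, 1.0, and 1.5 seconds with latencies of 100, 200, 300, and 400 ms, where the third one fails, `summarize` reports a p50 of 200 ms, a p95 of 400 ms, an error rate of 25%, and a throughput of about 2.1 requests per second.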

Choosing the right performance test tools

When choosing a load testing tool, it’s important to consider the specific needs of the application and the resources available for testing. By carefully evaluating different tools based on their features, scalability, and cost, it’s possible to select the best tool for the job and ensure accurate and effective load testing. Some considerations in choosing load testing tools include:

- Protocol support: The tool must support the protocols used by the application so that it can accurately simulate user behavior and provide meaningful data.

- Scalability: The tool should be able to scale up to simulate the expected load on the system.

- Reporting: The tool should provide clear and detailed reports on the results of the test.

- Ease of use: The tool should be easy to use and configure, with intuitive interfaces and clear documentation.

- Cost: The tool should fit within the budget allocated for load testing.

- Running environment: Where you can run the load tests, and how easily you can integrate the tool into your system.

- Automated result analysis: The ability to analyze results automatically (for example, passing a run only if the error rate is below X%) is critical if you want to automate the entire performance testing flow.
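Automated result analysis usually means declaring pass/fail criteria up front and checking them after every run; k6, for example, has a built-in `thresholds` option for this. The following tool-agnostic sketch shows the idea in plain Python. The metric names and limits in `THRESHOLDS` are hypothetical placeholders, to be replaced with values derived from your own SLAs.

```python
import operator
from typing import Dict, List, Tuple

# Hypothetical SLA thresholds: metric name -> (comparison, limit).
# The limits are placeholders, not recommendations.
THRESHOLDS: Dict[str, Tuple[str, float]] = {
    "error_rate_pct": ("<", 1.0),
    "p95_ms": ("<", 500.0),
    "throughput_rps": (">=", 50.0),
}

OPS = {"<": operator.lt, "<=": operator.le, ">": operator.gt, ">=": operator.ge}


def evaluate(metrics: Dict[str, float],
             thresholds: Dict[str, Tuple[str, float]] = THRESHOLDS) -> List[str]:
    """Return human-readable violations; an empty list means the run passed.
    A metric missing from the results is treated as a violation."""
    violations: List[str] = []
    for name, (op, limit) in thresholds.items():
        if name not in metrics:
            violations.append(f"{name}: missing from results")
        elif not OPS[op](metrics[name], limit):
            violations.append(f"{name}={metrics[name]}, expected {op} {limit}")
    return violations
```

In a CI job, the run can then fail the build with `sys.exit(1 if evaluate(metrics) else 0)`, which is what makes a fully automated performance testing flow possible.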

Some well-known open-source performance test tools include:

- Apache JMeter: Supports multiple protocols and can be used for load testing, functional testing, and regression testing.

- k6: A tool for load testing, stress testing, and performance testing that uses JavaScript as its scripting language.

- Gatling: A tool written in Scala that supports HTTP and can be used for load testing and performance testing.

- Locust: A performance testing tool whose test scenarios are written in Python.

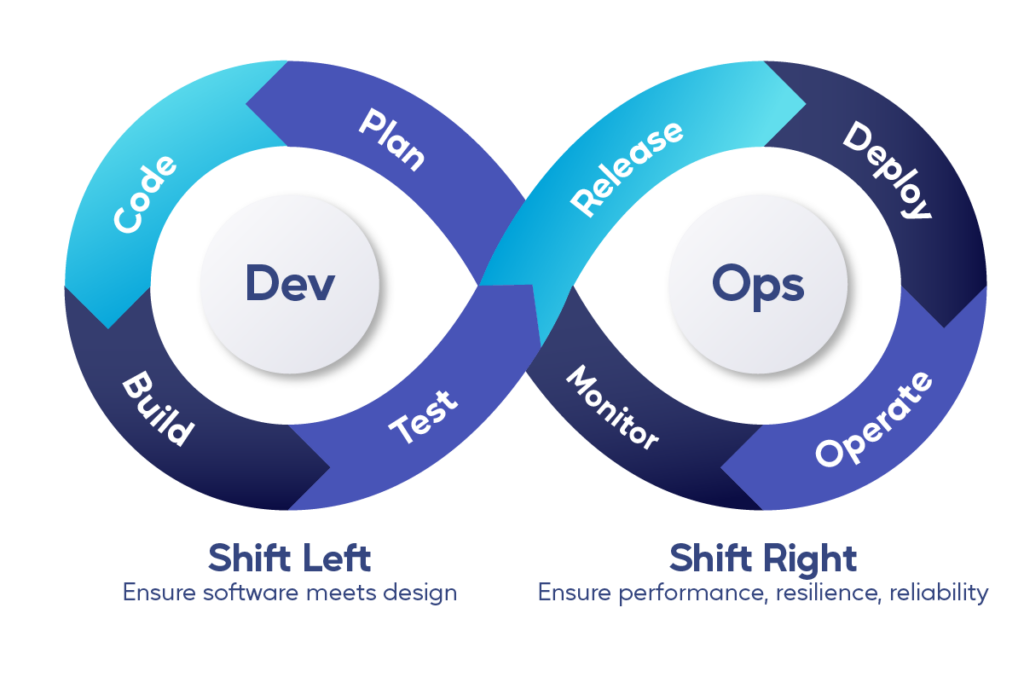

Shifting Left with performance tests

An increasingly common term in software development is “shift left,” that is, the concept of moving certain activities to earlier in the development lifecycle. By shifting performance tests left, teams can catch and address issues sooner.

Why shift left?

In the same way that you don’t deploy to production without having written unit tests, you need to see how the system reacts to your new code under load before deploying it to production. Here are a number of reasons why this practice should be adopted:

- Catching issues earlier: By conducting performance tests earlier in the development lifecycle, the team can catch issues before they become bigger problems. This can save time and effort in the long run, as it’s typically easier to address issues earlier in the process.

- More accurate results: When performance tests are conducted later in the process, it can be difficult to accurately simulate real-world usage patterns. Shifting left with performance tests allows the team to test against a more realistic environment, which can lead to more accurate results.

- Faster feedback: When performance tests are conducted earlier in the process, the team can get feedback more quickly. This means they can address issues and make changes more quickly, which can help keep the project on track and ensure that the final product meets performance requirements.

How to shift your performance tests left

To shift left with performance tests, teams will need to take a few critical steps, outlined below:

- Set up a testing environment: The first step is to set up a testing environment that mirrors the production environment as closely as possible. One aspect to consider is the volume of data already stored in the system, since it can affect performance. Be aware that a dedicated load test environment costs money, so it isn’t an obvious decision in every organization. On the other hand, running performance tests only in production will affect the system’s stability.

- Develop test scenarios: Using the selected tool, write your test scenarios. My best tip here is to keep the tests as simple as possible, while keeping in mind that they need to simulate real-world usage patterns.

- Integrate performance tests into the development process: Once the testing environment and test scenarios are in place, you will need to integrate performance testing into the development process. This could involve running automated tests as part of the CI process or conducting manual tests at regular intervals.

- Analyze results and make changes: Finally, you will need to analyze the results of the performance tests and make changes as necessary. Automating this step is critical if you want to automate the entire process. If the results still meet your SLAs, no action is needed; if not, the data can be used to optimize the system’s architecture and resources, improve the user experience, and ensure that the application meets its performance goals. Running load tests frequently also makes it easier to pinpoint which code changes affected the system’s performance.
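For the "develop test scenarios" step, one way to approximate real-world usage while keeping the test itself simple is to draw each virtual user's next action from a weighted traffic mix. In this sketch the action names and the 70/20/10 split are hypothetical; in practice the weights would come from production analytics or access logs. A fixed random seed keeps runs reproducible, so consecutive results stay comparable.

```python
import random
from typing import Dict, List

# Hypothetical actions and relative weights (roughly 70% / 20% / 10% of traffic).
SCENARIO_MIX: Dict[str, int] = {
    "browse_feed": 70,
    "search": 20,
    "submit_report": 10,
}


def pick_actions(mix: Dict[str, int], n: int, seed: int = 42) -> List[str]:
    """Draw n actions in proportion to their weights.

    A dedicated Random instance with a fixed seed makes the sequence
    reproducible without touching the global random state."""
    rng = random.Random(seed)
    names = list(mix)
    weights = [mix[name] for name in names]
    return rng.choices(names, weights=weights, k=n)
```

Each virtual user would then loop over its list and dispatch every action name to the function that issues the corresponding request. Giving each user a different seed (for example, its index) avoids every user replaying the identical sequence.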
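Running load tests frequently also enables a simple regression check: compare each run against a baseline built from recent runs, not only against a fixed SLA. The sketch below assumes you store the p95 latency of every run; the 10% tolerance and five-run window are arbitrary assumptions, to be tuned against the natural run-to-run noise of your environment.

```python
import statistics
from typing import List


def is_regression(history_p95_ms: List[float], current_p95_ms: float,
                  tolerance: float = 0.10, window: int = 5) -> bool:
    """Flag the current run if its p95 latency exceeds the median of the last
    `window` runs by more than `tolerance` (10% by default)."""
    recent = history_p95_ms[-window:]
    if not recent:
        return False  # no baseline yet, so nothing to compare against
    # A median of recent runs is less sensitive to a single noisy run than a mean.
    baseline = statistics.median(recent)
    return current_p95_ms > baseline * (1 + tolerance)
```

With recent p95 values of 200, 210, 205, 198, and 202 ms, the baseline is 202 ms, so a run at 215 ms passes and a run at 240 ms is flagged. When tests run on every merge, a flagged run points directly at the changes merged since the previous run.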

Conclusion

Shifting left with performance tests is a valuable strategy for any team that’s looking to improve the quality and performance of their applications. By conducting performance tests earlier in the development process, teams can catch issues earlier, get more accurate results, and get feedback more quickly. To shift left successfully, set up a testing environment, develop test scenarios, integrate performance tests into the development process, and analyze the results and make changes as necessary. At ActiveFence, shifting left has helped us reduce costs by optimizing offline during the development process rather than catching issues later in production. For example, load tests revealed issues with our garbage collection: the sustained load in the test surfaced problems that would have stayed invisible without it. By catching these issues, we dramatically reduced CPU utilization and lowered our costs before deploying to production.

Are you interested in optimization, performance, and all things backend? Come join us!